I work on language model post-training and am currently based in San Francisco.

Previously, I was an undergraduate at Princeton University, where I'm incredibly grateful to have been advised by Prof. Karthik Narasimhan, Dr. Shunyu Yao, and Prof. Benjamin Eysenbach. I also spent time at Google, Citadel Securities, and Redwood Research.

Some other interests: technology policy, AI for health, mechanism design, and cardistry. Feel free to reach out by emailing [x]@alumni.princeton.edu, where [x] is replaced by mwtang.

Selected Work

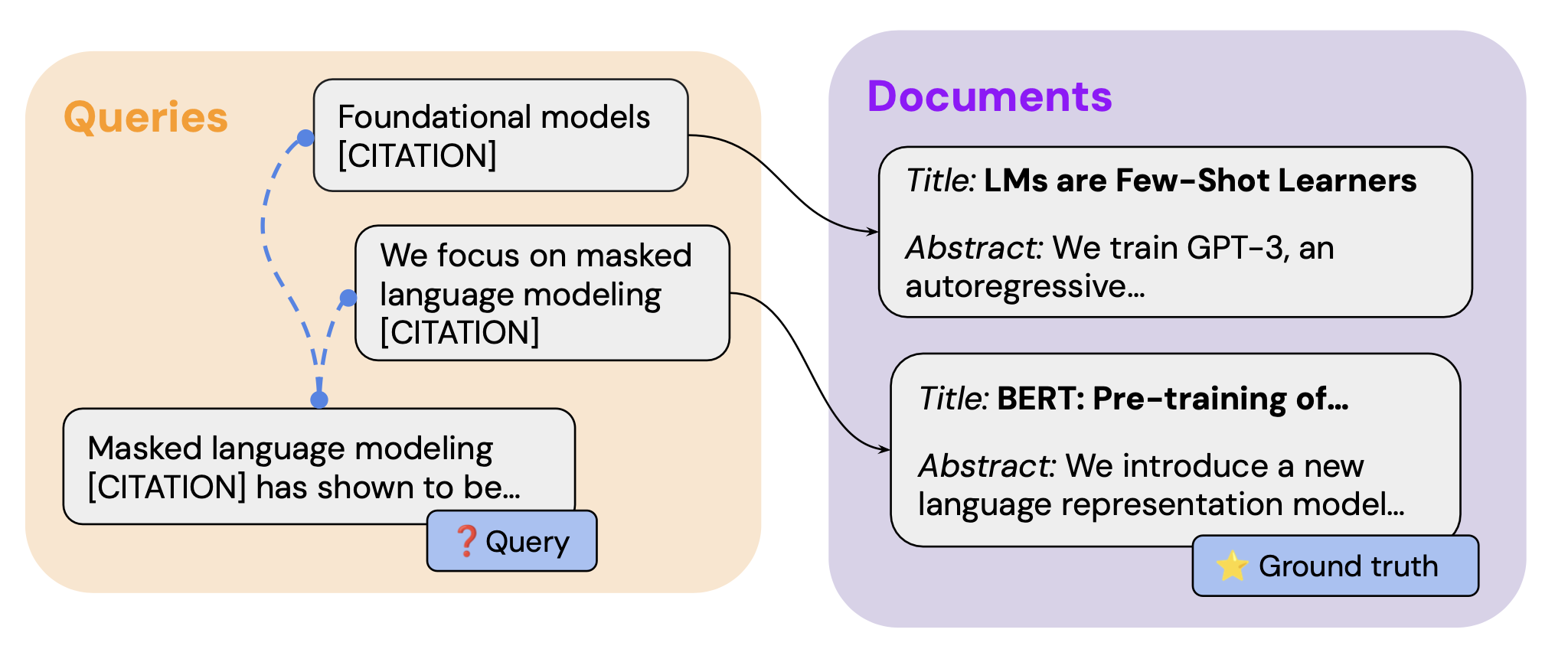

BRIGHT: Benchmarking Reasoning-Intensive Retrieval

Hongjin Su*, Howard Yen*, Mengzhou Xia*, Weijia Shi, Niklas Muennighoff, Han-yu Wang, Haisu Liu, Quan Shi, Zachary S. Siegel, Michael Tang, Ruoxi Sun, Jinsung Yoon, Sercan O. Arik, Danqi Chen, Tao Yu

ICLR 2025 (Spotlight)

paper | code | site | tweet